In today’s hyper-connected world, digital platforms are no longer just tools—they’re the spaces where we work, play, and connect. But with this unprecedented scale of interaction comes an equally unprecedented challenge: keeping these platforms safe, inclusive, and trustworthy.

Over the past few years, content moderation has become a lightning rod for debate. From high-profile voices in tech, venture capital, and politics using it to push personal agendas, to anonymous profiles flooding comment threads, the conversation has become increasingly polarised. On one end, you have champions of free speech and expression; on the other, advocates for diversity, equity, and inclusion. Algorithms, of course, amplify the loudest voices on both sides.

Add to this the drama of Elon Musk’s takeover of Twitter (now X) and Facebook’s rollback of its fact-checking policies, and you might think the era of content moderation is fading. But the numbers tell a different story. According to Statista, every single minute of 2024 saw 251.1 million emails sent, 138.9 million Reels played on Facebook and Instagram, and 5.9 million Google searches performed. Social media platforms continue to dominate online engagement, with Facebook leading the charge at over three billion monthly active users. Meanwhile, TikTok, a relative newcomer, amassed 186 million downloads in the fourth quarter of 2024 alone.

With this scale of activity, content moderation isn’t just a nice-to-have—it’s a necessity. And while the rhetoric around moderation has been focused on your aunt’s post violating community guidelines on your local community Facebook page, the reality is far more complex. Every minute, millions of posts, videos, and comments are uploaded. While most of this content is harmless, a small percentage can cause significant harm—whether it’s misinformation, hate speech, or explicit material.

Why AI Isn’t Enough

Content moderation is one of the thorniest challenges facing social media platforms today. A fascinating study by Clune and McDaid (2024) dives deep into how platforms like Facebook, Twitter, and YouTube are building accountability systems to manage harmful content and shape user behavior in the digital space. Their research sheds light on the intricate processes behind moderation and the gaps that still need to be addressed.

Content moderation begins with establishing community standards—rules that define acceptable behavior on a platform. These standards serve as the foundation for creating safe and inclusive digital spaces, ensuring users understand the boundaries of acceptable content.

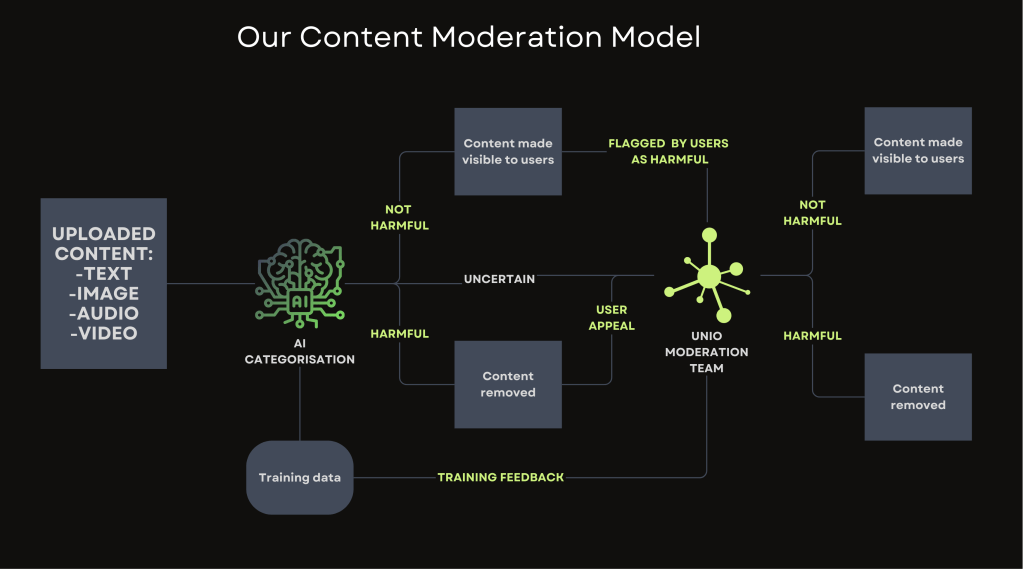

Social media organisations rely heavily on machine learning models to enforce these community standards at scale. These automated systems excel at detecting severe violations, such as child exploitation and terrorism, before they reach users. For example, at the time of the study, Facebook had achieved a 95.6% proactive detection rate for hate speech violations in Q1 2022, a figure that has likely improved since as its AI models have been optimised. However, machine learning models have their limitations. They often struggle with nuanced content, such as sarcasm, cultural context, or lesser-used dialects, and rely on user reporting for more subjective violations. This process, known as “reactive detection,” involves flagged content being reviewed and annotated by human moderators. This annotated data is then fed back into the AI pipeline to further enhance its knowledge of these cultural nuances.
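To make the proactive and reactive paths described above more concrete, here is a minimal Python sketch of how such routing might look. It is an illustration under stated assumptions, not any platform's actual system: the `Post` and `ModerationQueue` names, the `model_score` field, and the 0.95 threshold are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off, chosen only for illustration.
AUTO_REMOVE_THRESHOLD = 0.95


@dataclass
class Post:
    post_id: str
    text: str
    model_score: float      # assumed classifier confidence that the post violates standards
    user_reports: int = 0   # number of times users have flagged the post


@dataclass
class ModerationQueue:
    review_queue: list = field(default_factory=list)
    training_examples: list = field(default_factory=list)

    def route(self, post: Post) -> str:
        """Return 'removed', 'review', or 'published' for a post."""
        # Proactive detection: the model is confident enough to act
        # before the content reaches users.
        if post.model_score >= AUTO_REMOVE_THRESHOLD:
            return "removed"
        # Reactive detection: a user report sends the post to a human moderator.
        if post.user_reports > 0:
            self.review_queue.append(post)
            return "review"
        return "published"

    def annotate(self, post: Post, violates: bool) -> None:
        """Record a human moderator's decision as a labelled training example."""
        self.review_queue.remove(post)
        self.training_examples.append((post.text, violates))


queue = ModerationQueue()
flagged = Post("p2", "a sarcastic remark the model missed", 0.20, user_reports=1)

print(queue.route(Post("p1", "clear violation", 0.99)))  # proactive path
print(queue.route(flagged))                              # reactive path
queue.annotate(flagged, violates=True)                   # human label kept for retraining
```

The labelled pairs collected in `training_examples` stand in for the annotated data that is fed back into the AI pipeline; how that retraining actually happens is outside the scope of this sketch.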

Bridging the Gap Between Automation and Human Expertise

While machine learning models are critical for handling the sheer volume of content generated daily, they cannot fully replace the empathy, judgment, and contextual understanding that human moderators bring to the table. This is where UNIO Global’s expertise in ethical, high-quality data annotation and moderation makes a difference. By providing well-trained human moderators and robust data annotation pipelines, UNIO helps platforms address the limitations of automated systems in the following ways:

- Cultural and Linguistic Nuance: Automated systems often miss linguistic and contextual subtleties. UNIO Global has employed over 30,000 annotators across Asia, Africa, South America and Europe. From Bantu languages spoken across Africa to dialects spread across the Indonesian archipelago, our teams bring deep cultural and linguistic expertise to ensure moderation efforts are both accurate and contextually sensitive.

- Strategically Aligned Project Management: In 2024, 60% of social media users were in the Asia-Pacific region. India is poised to overtake China as the country with the most users by 2028, and Nigeria is tipped to lead global growth in the sector over the same period. For this reason, UNIO Global has strategically developed project teams in these regions, supported by our globally situated project managers. This allows us to seamlessly integrate with User Trust & Safety teams to deliver scalable, contextually aligned solutions.

- Optimising AI Through Human Insight: UNIO’s annotators refine AI systems by labelling and categorising content. This data feeds back into AI pipelines, improving detection algorithms and enhancing precision over time.

Final Thoughts

Content moderation is no longer just a reactive process; it’s a strategic imperative for building trust and fostering safe, inclusive digital spaces. With the sheer scale of online activity and the complexity of harmful content, platforms need more than just algorithms. They need a thoughtful, human-first approach. At UNIO Global, we bridge the gap between technology and humanity, delivering culturally nuanced, scalable, and precise solutions. By integrating seamlessly with AI development teams and leveraging our global expertise, we’re not just moderating content; we’re shaping a safer, more connected digital future.